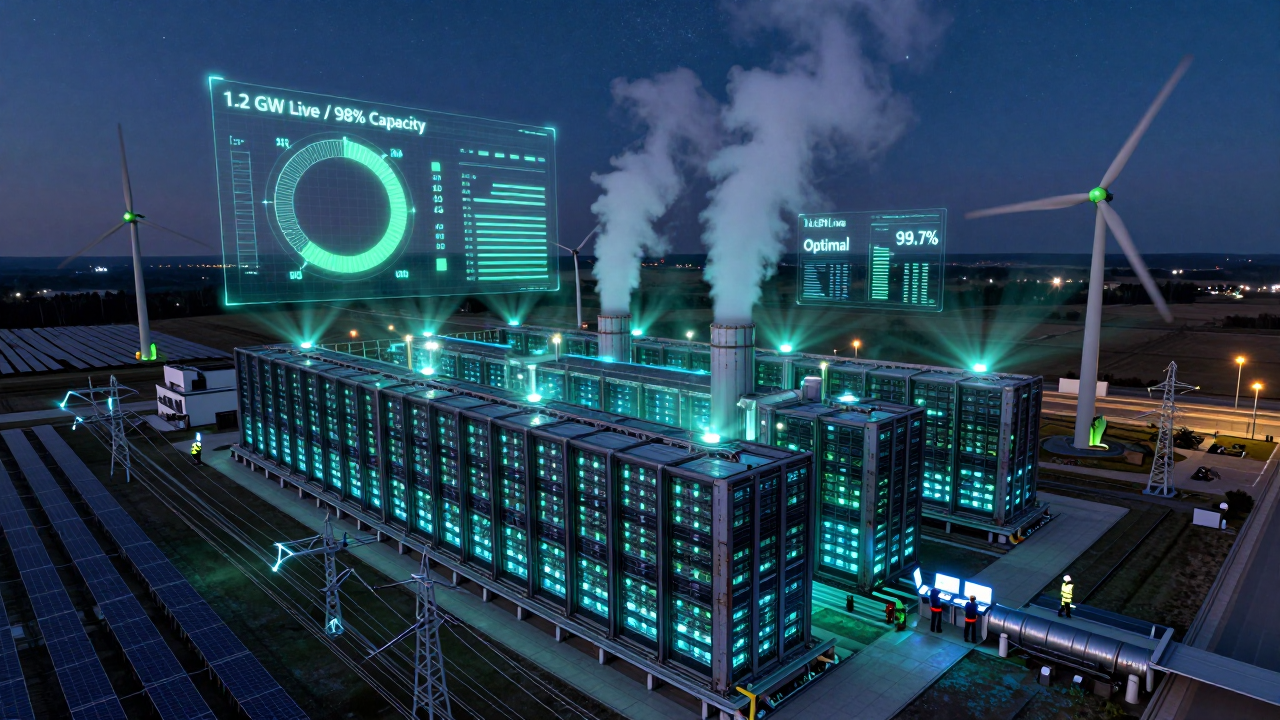

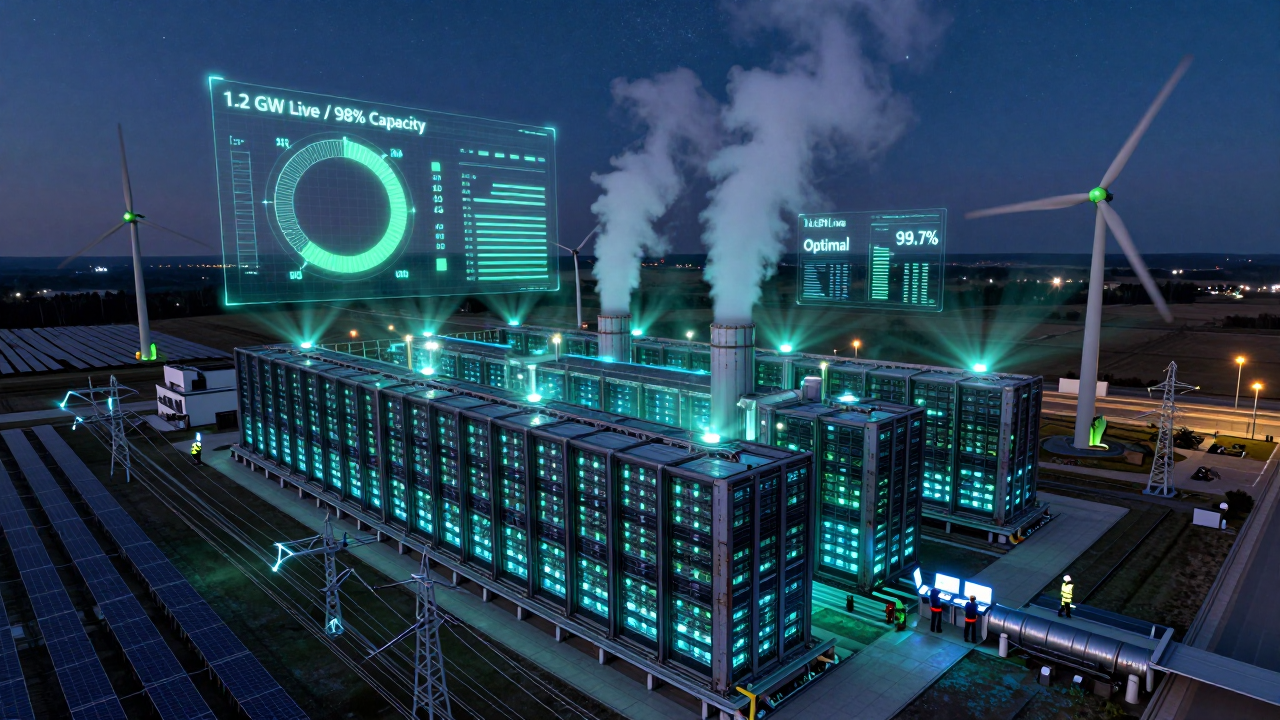

The numbers are hard to grasp: $602 billion in hyperscaler CapEx for 2026, a 36% year-over-year increase. OpenAI's Stargate project envisions $850 billion in AI infrastructure. Microsoft's Wisconsin facility will house "hundreds of thousands" of NVIDIA chips. AI data center power consumption is projected to double from 536 TWh in 2025 to 1,072 TWh in 2030. This is the largest infrastructure buildout since the internet itself, and it is reshaping everything from power grids to network architecture.

The Scale of Investment

Hyperscaler CapEx (2024-2027)

| Year | Total CapEx | AI Infrastructure (share of total) | YoY Growth (total CapEx) |
|---|---|---|---|
| 2024 | $280B | $168B (60%) | - |
| 2025 | $442B | $310B (70%) | +58% |
| 2026 | $602B | $452B (75%) | +36% |
| 2027E | $720B | $576B (80%) | +20% |
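
The share and growth columns follow directly from the two dollar columns. A minimal Python sketch that recomputes them is below; the dictionary layout and function names are illustrative, and the figures are simply copied from the table.

```python
# Figures copied from the table, in billions of USD:
# year -> (total CapEx, AI infrastructure spend).
CAPEX = {
    "2024": (280, 168),
    "2025": (442, 310),
    "2026": (602, 452),
    "2027E": (720, 576),
}


def ai_share(total: float, ai: float) -> float:
    """Share of total CapEx going to AI infrastructure, in percent."""
    return 100.0 * ai / total


def yoy_growth(previous: float, current: float) -> float:
    """Year-over-year growth of total CapEx, in percent."""
    return 100.0 * (current - previous) / previous


def summarize(capex: dict) -> list:
    """Return (year, AI share %, YoY growth % or None) rows, rounded to whole percent."""
    rows = []
    previous_total = None
    for year, (total, ai) in capex.items():
        growth = None if previous_total is None else round(yoy_growth(previous_total, total))
        rows.append((year, round(ai_share(total, ai)), growth))
        previous_total = total
    return rows


if __name__ == "__main__":
    for year, share, growth in summarize(CAPEX):
        growth_text = "-" if growth is None else f"+{growth}%"
        print(f"{year}: AI share {share}%, YoY {growth_text}")
```

The rounded results match the percentages quoted in the table.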

Major Projects Announced

| Project | Investment | Company | Scale |
|---|---|---|---|
| Stargate | $850B total | OpenAI/SoftBank | Multi-site US buildout |
| Wisconsin AI DC | $10B+ | Microsoft | Hundreds of thousands of NVIDIA GPUs |
| Google Cloud AI | $8B | Google | Multiple facilities |
| AWS AI expansion | $12B | Amazon | Global infrastructure |
| Meta AI data centers | $15B | Meta | AI training focused |

The Power Crisis

The Physics Problem

| System | Power per Rack | Density vs. Baseline |
|---|---|---|
| Traditional server | 5-15 kW | Baseline |
| GPU compute (A100) | 20-30 kW | 2-3x |
| GPU compute (H100) | 40-60 kW | 4-6x |
| GPU compute (B200) | 70-100+ kW | 7-10x |

A single AI rack now consumes what an entire row of traditional servers used.
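
To make the density multiples concrete, here is a rough Python sketch. It assumes a 10 kW baseline (the midpoint of the 5-15 kW traditional range, which is my assumption rather than a figure from the sources) and uses hypothetical helper names.

```python
BASELINE_KW = 10.0  # assumption: midpoint of the 5-15 kW traditional range

# Per-rack power ranges in kW, copied from the table (upper bound of "100+" taken as 100).
RACK_POWER_KW = {
    "A100": (20, 30),
    "H100": (40, 60),
    "B200": (70, 100),
}


def density_multiple(rack_kw_range, baseline_kw=BASELINE_KW):
    """Express a (low, high) rack power range as multiples of the baseline rack."""
    low, high = rack_kw_range
    return (low / baseline_kw, high / baseline_kw)


def racks_per_megawatt(rack_kw):
    """Number of whole racks that fit into 1 MW (1,000 kW) of IT power."""
    if rack_kw <= 0:
        raise ValueError("rack_kw must be positive")
    return int(1000 // rack_kw)


if __name__ == "__main__":
    for name, kw_range in RACK_POWER_KW.items():
        low, high = density_multiple(kw_range)
        print(f"{name}: {low:.0f}-{high:.0f}x baseline")
    print("Racks per MW at 10 kW:", racks_per_megawatt(10))
    print("Racks per MW at 100 kW:", racks_per_megawatt(100))
```

Under that assumption, a megawatt that once fed about a hundred traditional racks feeds roughly ten top-end AI racks.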

Power Consumption Projections

| Year | AI DC Power (TWh) | % of Global Electricity |
|---|---|---|
| 2024 | 380 | 1.5% |
| 2025 | 536 | 2.0% |
| 2030 | 1,072 | 3.5% |

AI data centers will consume more electricity than many countries.
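
It helps to translate the projection into an annual growth rate. The sketch below applies the standard compound annual growth rate formula to the table's figures; the function name is illustrative.

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    if start <= 0 or years <= 0:
        raise ValueError("start and years must be positive")
    return (end / start) ** (1.0 / years) - 1.0


if __name__ == "__main__":
    # TWh figures copied from the table.
    print(f"2024 -> 2025: {cagr(380, 536, 1):.1%} per year")
    print(f"2025 -> 2030: {cagr(536, 1072, 5):.1%} per year")
```

Doubling over five years works out to just under 15% per year, noticeably slower than the roughly 41% jump the table shows between 2024 and 2025.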

Grid Constraints Are Real

| Location | Issue | Impact |
|---|---|---|
| Northern Virginia | New connections paused until 2026 | Project delays |
| Dublin | Moratorium on new DCs | Geographic limits |
| Singapore | New DC restrictions | Supply constraints |
| Netherlands | Grid connection delays | 18+ month waits |

Data center siting is now primarily a power availability decision.

Cooling: The New Frontier

Why Cooling Matters More

| Generation | Heat per Chip | Cooling Method |
|---|---|---|
| Traditional CPU | 200-300 W | Air cooling |
| A100 GPU | 400 W | Air/hybrid |
| H100 GPU | 700 W | Liquid required |
| B200 GPU | 1,000+ W | Liquid essential |

Cooling Technologies Emerging

| Technology | Efficiency Gain vs. Air | Deployment |
|---|---|---|
| Traditional air | Baseline | Legacy |
| Rear-door heat exchangers | +20% | Growing |
| Direct-to-chip liquid | +40% | Standard for AI |
| Immersion cooling | +50% | Emerging |

The Infrastructure Change

Traditional data center:

- CRAC units on floor

- Raised floor air delivery

- Hot/cold aisle containment

AI data center:

- Liquid cooling distribution

- Cooling distribution units (CDUs)

- Chip-level heat extraction

- Often outdoor heat rejection
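
For a feel of what liquid cooling distribution has to move, the basic heat balance (heat = mass flow × specific heat × temperature rise) gives a first-order flow estimate. This is a back-of-the-envelope sketch, not a CDU sizing method: it assumes plain water, that all rack heat goes into the liquid loop, and a 10 K coolant temperature rise, none of which comes from the sources.

```python
WATER_SPECIFIC_HEAT_J_PER_KG_K = 4186.0  # approximate value for liquid water
WATER_DENSITY_KG_PER_L = 1.0             # approximation; real coolant mixes differ


def coolant_flow_l_per_min(heat_load_kw: float, delta_t_k: float) -> float:
    """Liters per minute of water needed to absorb heat_load_kw with a delta_t_k rise."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    # Heat balance: P = m_dot * c_p * delta_T, solved for the mass flow m_dot in kg/s.
    mass_flow_kg_per_s = (heat_load_kw * 1000.0) / (
        WATER_SPECIFIC_HEAT_J_PER_KG_K * delta_t_k
    )
    return mass_flow_kg_per_s / WATER_DENSITY_KG_PER_L * 60.0


if __name__ == "__main__":
    # Hypothetical 100 kW rack with an assumed 10 K coolant temperature rise.
    print(f"{coolant_flow_l_per_min(100.0, 10.0):.1f} L/min")
```

The point is the scaling: flow grows linearly with rack power, so every jump in rack density shows up directly in piping and pump capacity.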

Network Architecture Revolution

The Bandwidth Challenge

| Workload | Network Requirement |
|---|---|
| Traditional web | 1-10 Gbps per server |
| AI training | 400-800 Gbps per GPU |
| Multi-node training | Aggregate Tbps per job |
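
One way to see why training needs this much bandwidth is to estimate how long a single gradient synchronization takes. The sketch below uses the textbook bandwidth-only cost of a ring all-reduce, in which each GPU sends roughly 2(N-1)/N times the payload. It ignores latency, overlap with compute, and topology, and the 10 GB payload is a hypothetical figure rather than one from the sources.

```python
def ring_allreduce_seconds(payload_bytes: float, num_gpus: int, link_gbps: float) -> float:
    """Bandwidth-only estimate of one ring all-reduce over links of link_gbps each."""
    if link_gbps <= 0:
        raise ValueError("link_gbps must be positive")
    if num_gpus < 2:
        return 0.0  # nothing to synchronize with a single GPU
    bytes_per_second = link_gbps * 1e9 / 8.0
    # Each GPU sends (and receives) 2 * (N - 1) / N times the payload in a ring.
    traffic_bytes = 2.0 * (num_gpus - 1) / num_gpus * payload_bytes
    return traffic_bytes / bytes_per_second


if __name__ == "__main__":
    payload = 10e9  # hypothetical 10 GB of gradients
    for gbps in (10.0, 400.0, 800.0):
        seconds = ring_allreduce_seconds(payload, 8, gbps)
        print(f"{gbps:>5.0f} Gbps: {seconds:.3f} s per all-reduce")
```

At web-server link speeds the synchronization alone would take many seconds per step, which is why per-GPU bandwidth in the hundreds of Gbps is the starting point for AI fabrics.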

Why Network Design Now Matters Most

Deloitte's insight: "Network design, not just compute, will define winners."

| Component | AI DC Requirement |
|---|---|
| GPU-to-GPU fabric | InfiniBand or RoCE |
| Latency | Sub-microsecond |
| Bandwidth | 400G-800G per port |
| Topology | Non-blocking, low-oversubscription |
| Scale | Hundreds of thousands of GPUs in a single fabric |

NVIDIA's Networking Dominance

| Technology | Purpose | Market Position |
|---|---|---|
| InfiniBand | GPU fabric | ~90% of AI training |
| Spectrum-X | Ethernet for AI | Growing Ethernet alternative |
| NVLink | GPU-to-GPU | Proprietary, fastest |
| ConnectX | NICs | Industry standard |

The Stargate Vision

What OpenAI Announced

| Element | Details |
|---|---|
| Total investment | $850 billion over multiple years |
| Partners | SoftBank, Oracle, others |
| Focus | AI training and inference |
| Scale | Largest AI infrastructure project |
| Location | United States |

What It Would Mean

| Metric | Stargate Scale |
|---|---|
| GPU capacity | Millions of chips |
| Power consumption | Multi-GW (equivalent to large cities) |
| Jobs | Tens of thousands |
| US AI capacity | Massive increase |
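
The "millions of chips" and "multi-GW" rows are consistent with each other, as a quick estimate shows. The sketch below multiplies chip count by per-chip power, a server overhead factor, and PUE. The 1,000 W per chip and the 1.1 PUE echo figures quoted elsewhere in this article; the chip count of two million and the 1.5x server overhead (CPUs, memory, networking) are purely hypothetical.

```python
def facility_power_gw(
    num_chips: int,
    watts_per_chip: float,
    server_overhead: float,
    pue: float,
) -> float:
    """Rough facility power in GW: chips x chip power x server overhead x PUE."""
    if min(num_chips, watts_per_chip, server_overhead, pue) <= 0:
        raise ValueError("all inputs must be positive")
    return num_chips * watts_per_chip * server_overhead * pue / 1e9


if __name__ == "__main__":
    # Hypothetical inputs: 2 million chips at 1,000 W each,
    # 1.5x server overhead (assumed), PUE of 1.1.
    print(f"{facility_power_gw(2_000_000, 1000.0, 1.5, 1.1):.1f} GW")
```

Even before any overhead, one million 1,000 W chips draw a full gigawatt, so a multi-million-chip deployment lands in multi-GW territory almost by definition.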

Skeptical Takes

| Concern | Validity |
|---|---|
| Funding certainty | Partnership details vague |
| Power availability | US grid constraints real |
| Demand justification | Assumes continued AI scaling benefits |
| Timeline | Multi-year, subject to change |

What This Means for Developers

Cloud Capacity Implications

| Factor | Developer Impact |
|---|---|
| GPU availability | Improving but still constrained |
| Pricing | Gradually decreasing |
| Geographic options | Expanding |
| Latency options | More edge inference locations |

Infrastructure Skills in Demand

| Skill | Demand Level | Context |
|---|---|---|
| MLOps | Very High | Production AI systems |
| Distributed training | High | Multi-node AI jobs |
| GPU optimization | Very High | Efficient compute use |
| Infrastructure as code | High | Automated provisioning |
| Networking (AI fabric) | Growing | Specialized demand |

Cost Optimization Strategies

For training:

- Spot instances when possible

- Efficient checkpoint strategies

- Mixed-precision training

- Distributed training optimization
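
Spot instances and checkpointing go together: the more often a job can be interrupted, the more often it should save state. A common first-order rule is Young's approximation, which sets the checkpoint interval to sqrt(2 × checkpoint cost × mean time between interruptions). The sketch below is only that approximation, with hypothetical numbers; it is not a recommendation from the sources.

```python
import math


def young_checkpoint_interval(checkpoint_seconds: float, mtbf_seconds: float) -> float:
    """Young's approximation for the checkpoint interval that minimizes lost work.

    checkpoint_seconds: time it takes to write one checkpoint.
    mtbf_seconds: mean time between interruptions (for example, spot preemptions).
    """
    if checkpoint_seconds <= 0 or mtbf_seconds <= 0:
        raise ValueError("both inputs must be positive")
    return math.sqrt(2.0 * checkpoint_seconds * mtbf_seconds)


if __name__ == "__main__":
    # Hypothetical job: 60 s to write a checkpoint, one preemption every 4 hours on average.
    interval = young_checkpoint_interval(60.0, 4 * 3600.0)
    print(f"Checkpoint roughly every {interval / 60.0:.0f} minutes")
```

The approximation holds best when writing a checkpoint is much cheaper than the time between interruptions; measure both for your own jobs before relying on it.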

For inference:

- Model quantization (FP8, INT8)

- Batching optimization

- Edge deployment when latency allows

- Multi-tenant serving
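
To show what quantization buys, here is a small pure-Python sketch of symmetric per-tensor INT8 quantization plus a weight-memory estimate. It is a teaching example only: production stacks rely on framework kernels and usually quantize per channel or per group, and the 7-billion-parameter model is hypothetical.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Map quantized integers back to approximate float values."""
    return [q * scale for q in quantized]


def model_size_gb(num_params: float, bits_per_param: int) -> float:
    """Memory needed for the weights alone, in gigabytes (10^9 bytes)."""
    return num_params * bits_per_param / 8.0 / 1e9


if __name__ == "__main__":
    weights = [0.5, -1.0, 0.25, 0.0]
    q, scale = quantize_int8(weights)
    print("quantized:", q)
    print("restored: ", [round(x, 4) for x in dequantize(q, scale)])
    # Hypothetical 7-billion-parameter model.
    print(f"16-bit: {model_size_gb(7e9, 16):.0f} GB, 8-bit: {model_size_gb(7e9, 8):.0f} GB")
```

Halving the bits per weight halves the memory for weights, which often means fewer or smaller GPUs per replica; the trade-off is a small rounding error per weight, bounded here by half the scale.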

Regional Implications

US

| Factor | Status |
|---|---|
| Investment | Largest recipient |
| Power | Grid upgrades needed |
| Talent | Concentrated in tech hubs |
| Policy | CHIPS Act support |

Europe

| Factor | Status |
|---|---|
| Investment | Growing but behind US |
| Power | Renewable focus, but grid limits |
| Regulation | AI Act compliance requirements |
| Sovereignty | Push for domestic capacity |

Asia

| Country | Status |
|---|---|
| China | Building despite chip restrictions |
| Japan | SoftBank-led expansion |
| Singapore | Supply constrained |
| India | Major growth opportunity |

Middle East

| Factor | Status |
|---|---|
| Investment | Massive ($100B+ announced) |
| Power | Abundant energy |
| Talent | Importing expertise |
| Projects | Transcendence (Saudi Arabia) |

The Environmental Question

Power Mix Concerns

| Company | Stated Target | Current Status |
|---|---|---|
| Google | 24/7 carbon-free by 2030 | ~65% |
| Microsoft | 100% renewable by 2025 | ~80% |
| AWS | 100% renewable by 2025 | ~85% |
| Meta | Net zero by 2030 | ~75% |

The Reality

- Renewable commitments are often accounting-based (buying credits)

- Real-time matching of consumption to renewable generation is harder

- AI's power surge is faster than renewable buildout

- Some facilities run on fossil-fuel backup frequently

Efficiency Efforts

| Approach | Impact |
|---|---|
| PUE improvements | Incremental (1.4 → 1.1) |
| Liquid cooling | ~40% efficiency gain |
| Model efficiency | 10-100x improvements possible |
| Hardware efficiency | Each generation improves |
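
PUE (power usage effectiveness) is total facility power divided by IT power, so the effect of moving from 1.4 to 1.1 can be worked out directly. A minimal sketch follows, with illustrative function names and a hypothetical 100 MW IT load.

```python
def facility_power_mw(it_power_mw: float, pue: float) -> float:
    """Total facility power for a given IT load, since PUE = total / IT."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_power_mw * pue


def total_savings_fraction(old_pue: float, new_pue: float) -> float:
    """Fraction of total facility power saved at constant IT load."""
    return (old_pue - new_pue) / old_pue


def overhead_savings_fraction(old_pue: float, new_pue: float) -> float:
    """Fraction of non-IT overhead (cooling, power conversion) eliminated."""
    return ((old_pue - 1.0) - (new_pue - 1.0)) / (old_pue - 1.0)


if __name__ == "__main__":
    it_load_mw = 100.0  # hypothetical IT load
    print(f"PUE 1.4: {facility_power_mw(it_load_mw, 1.4):.0f} MW total")
    print(f"PUE 1.1: {facility_power_mw(it_load_mw, 1.1):.0f} MW total")
    print(f"Total power saved: {total_savings_fraction(1.4, 1.1):.1%}")
    print(f"Overhead removed:  {overhead_savings_fraction(1.4, 1.1):.0%}")
```

This is why the table calls PUE gains incremental: removing three quarters of the overhead still trims total power by only about a fifth, because the IT load itself dominates.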

Investment Perspectives

The Bull Case

- AI demand is real and growing

- Infrastructure is years behind demand

- Margins for hyperscalers are strong

- Power constraints create moats

The Bear Case

- The $850B figure is a speculative commitment

- AI efficiency gains may reduce compute needs

- Power constraints could limit growth

- Economic downturn would pause investment

Who Benefits

| Sector | Companies | Outlook |
|---|---|---|
| Chips | NVIDIA, AMD, Broadcom | Strong |
| Networking | NVIDIA, Arista, Cisco | Strong |
| Power | Utilities, generators | Growing |
| Cooling | Vertiv, Schneider | Strong |
| Construction | DC builders | Strong |
| Real estate | DC REITs | Mixed |

What to Watch in 2026

Q1-Q2

- Stargate partnership details and initial sites

- Hyperscaler earnings for capacity guidance

- Power availability announcements

Q3-Q4

- Next-gen GPU deployments (B200, etc.)

- Cooling technology adoption rates

- Regional capacity expansion

Indicators to Track

| Indicator | Signal |
|---|---|
| GPU delivery times | Demand vs. supply |
| Cloud GPU pricing | Capacity availability |
| Power contract pricing | Infrastructure costs |
| Data center construction starts | Future capacity |

Practical Implications

For Startups

- Budget for compute as a significant expense line

- Optimize early, because inefficiency costs grow with scale

- Consider on-prem for predictable large workloads

- Geographic flexibility for best pricing

For Enterprise

- Lock in capacity with cloud commitments

- Invest in MLOps to maximize efficiency

- Consider hybrid approaches

- Track power costs, since providers will pass them through

For Developers

- Learn infrastructure alongside ML

- Optimization skills are high-value

- Understand networking for distributed systems

- Follow the hardware roadmap

Conclusion

The $850 billion AI infrastructure buildout isn't just about more compute. It's about fundamentally rethinking data center design for a power-hungry, network-intensive AI era.

For developers, this means:

- More capacity is coming, but constraints persist in 2026

- Efficiency skills become differentiating

- Infrastructure knowledge is increasingly valuable

- Geographic flexibility provides cost advantages

For the industry:

- Power is the new bottleneck, not just chips

- Network architecture matters as much as compute

- Cooling technology is a competitive differentiator

- Sustainability claims need scrutiny

The infrastructure buildout of 2024-2030 will determine who can run AI at scale. Understanding these dynamics isn't optional for anyone serious about AI's future.

Sources:

- Deloitte Tech Trends 2026

- CNBC Data Center Analysis

- Data Center Frontier Industry Reports

- Hyperscaler earnings reports and announcements